This journal entry documents the final outcome of our MI project.

My partner for this module was Denisa.

The final version of our code lets people interact with the computer (expression) through free gestures, such as touching shoulders and wrists. The computer responds with an ambient impression: sound played back to the user.

Here’s a walkthrough of the portions of code that we modified:

First, we added two different audio files: one is the output for one interaction, and the other is the output for the second interaction.

Then we started by experimenting with just the shoulders. Taking the positions of two shoulders from two people, we calculate the distance between them. As the distance gets smaller, the shoulderDiff value lowers as well, and this in turn lowers the audio volume, because the volume is set to `shoulderDiff / 300`. Dividing by 300 keeps the value between 0 and 1 as long as the two shoulders stay within 300 units of each other.
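The mapping from shoulder distance to volume can be sketched as a small standalone function. This is a minimal sketch, not our actual code: `distance`, `shoulderVolume`, and `MAX_SHOULDER_DIST` are hypothetical names, shoulders are assumed to be `{ x, y }` points, and the clamp to [0, 1] is an added assumption so that shoulders more than 300 units apart cannot push the volume above 1. In a p5.js sketch, the result could be passed to a p5.sound `SoundFile`'s `setVolume()`.

```javascript
// Hypothetical sketch: map the distance between two people's shoulders
// to a volume in [0, 1]. The divisor 300 comes from the journal entry;
// the clamp is an added assumption.
const MAX_SHOULDER_DIST = 300;

// Euclidean distance between two { x, y } points.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Closer shoulders give a smaller shoulderDiff and therefore a quieter sound.
function shoulderVolume(shoulderA, shoulderB) {
  const shoulderDiff = distance(shoulderA, shoulderB);
  const volume = shoulderDiff / MAX_SHOULDER_DIST;
  // Clamp so the result always stays within [0, 1].
  return Math.min(Math.max(volume, 0), 1);
}

// Usage: two shoulders 150 units apart give half volume.
const vol = shoulderVolume({ x: 100, y: 200 }, { x: 250, y: 200 });
console.log(vol); // 0.5
```

Clamping rather than only dividing means the volume saturates at full once people are far apart, instead of asking the audio library for a volume above 1.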

At first, we had trouble understanding how indexing into `poses` worked (we assumed each entry in `poses` represents one detected person). After reading the code a little more, we understood that we had to follow the parameters of `getKeypointPos()` and pass the person's index as the last argument, as in `getKeypointPos(poses, 'leftShoulder', 2);`.
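To illustrate what the index argument does, here is a hypothetical reimplementation of a helper with the same call shape as `getKeypointPos(poses, partName, personIndex)`. It is a sketch under stated assumptions, not the template's actual code: it assumes the ml5.js PoseNet result shape (one array entry per detected person, each with `pose.keypoints` holding `{ part, score, position: { x, y } }`) and zero-based person indices. The helper in the course template may count people differently.

```javascript
// Hypothetical reimplementation; assumes ml5 PoseNet's result shape and
// zero-based person indices.
function getKeypointPos(poses, partName, personIndex = 0) {
  // The index selects which detected person to read from.
  const person = poses[personIndex];
  if (!person) return null; // no one detected at that index
  // Look up the named body part, e.g. "leftShoulder" or "rightWrist".
  const keypoint = person.pose.keypoints.find((k) => k.part === partName);
  return keypoint ? keypoint.position : null;
}

// Usage with two mocked people.
const poses = [
  { pose: { keypoints: [{ part: "leftShoulder", score: 0.9, position: { x: 120, y: 200 } }] } },
  { pose: { keypoints: [{ part: "leftShoulder", score: 0.8, position: { x: 400, y: 210 } }] } },
];
console.log(getKeypointPos(poses, "leftShoulder", 1)); // { x: 400, y: 210 }
```

Returning `null` when a person or body part is missing lets the drawing loop skip a frame instead of crashing when someone steps out of view.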

With this final outcome, we were aiming for surface-free modalities for both expression and impression. We quickly realized that having a screen show any feedback at all binds the impression to a surface, so we removed the camera feed,

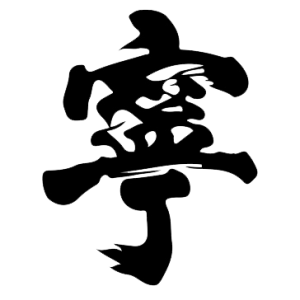

and replaced it with a simple GIF that plays throughout, independent of bodily movement and of anything happening in the code.