Prior to the kick-off of the first module, I read a little about interactivity by browsing the internet, without finding any authoritative sources on the term. Techopedia defines interactivity as the “communication process that takes place between humans and computer software”. For the most part, other sources define it as something closely related to interaction with computers or machines that have a UI (user interface). With this course, I hope to better understand the concept of interactivity, or the quality of interaction.

Module I is introduced as FIELDS + COMPUTER VISION

The concept of “Faceless Interaction” is introduced; it is discussed in the literature by Janlert & Stolterman.

An interface is often defined as:

- Surface of contact between things

- Boundary of independent things

- Control

- Expression and Impression

The first two generally imply physical contact, as they describe how two or more objects meet (e.g. how the tip of a marker meets the whiteboard). So the first two generally focus on what could be designed at that specific meeting point.

The 3rd and 4th points allow for more “faceless” interaction.

Control is often considered in UI design, when you create widgets to control behaviour, monitor behaviour, or exert influence on something (e.g. a button to control the entire project wall or screen, or to dim the lights in a classroom). Why does this allow for more “faceless” interaction? The concept of faceless interaction challenges the assumption that an interaction requires an interface. So the question here is whether it is possible to create something that “blends in” with the environment, so that one can interact with a system or object without directing interaction or focus at it.

Expression aims to emit some sort of feeling, and Impression is about something having an impact on us in a nuanced way. These can also be considered and incorporated when an interaction is faceless.

Next, Clint introduced faceless interaction fields. As mentioned above, the idea of a “faceless” interaction is to blend your actions in with the environment, automating things and doing away with a dedicated interface altogether, since the world itself is the interface. As in ubiquitous computing (Weiser 1988), “the technology recedes to the background of our lives”.

In addition to Ambient/Pervasive/Ubiquitous Computing, it’s important in a faceless interaction that the interaction is not directed. Directness is how we typically interact with things: the interaction you’re participating in becomes the focal point. When an interaction is not directed, however, it becomes less focused and more immersive.

Lastly, a ripple-like response is involved: like ripples spreading out, an action is picked up by objects depending on their purpose (e.g. after a certain amount of activity is reached in a room, the A.C. turns on).

One can argue that an interaction without a physical UI is a faceless interaction (like the Amazon Echo), but a voice UI is still a directed interaction that takes focus, so it is not exactly faceless. Clapping once to turn on a light is the same concept as a button, simply without the physical UI. It’s still directed!

Key points on Faceless interaction

- Users are immersed in an environment in which they’re not conceived as interacting with particular, targeted objects

- Instead, they are moving in situational and interactional “force-fields”

- Causing minor or major perturbations

- Guided, buffeted, seduced, affected

- More environmental

Introduction to Computer Vision

Computer Vision, in other words computational video stream processing, “sees” things of relevance in the stream rather than treating it as a mere series of images. It’s classically used for security purposes, facial recognition (processing images, colors, and shapes with AI techniques), manufacturing (cameras on production lines that pick up manufacturing faults), and logistics (scanning packages). More recently it’s commonly used in apps that rely on facial recognition: FaceApp, Snapchat face-swapping and lenses, etc.

Why is Computer Vision important, or relevant?

We’re giving a computer “vision”! Computer vision does a great job of seeing what we tell it to see, in detail, and, unlike human vision, it can interpret all of that information at once. Computers are getting smarter and smarter, so smart that they’re taking on tasks humans can’t do, or making those tasks much easier for us. Computer vision seeks to automate tasks that the human visual system can do. It essentially helps computers “see” and gain a high-level understanding of digital images, like photographs and videos!

In addition to everything I’ve mentioned above, there are many other things computer vision is capable of:

- Automated Traffic Surveillance

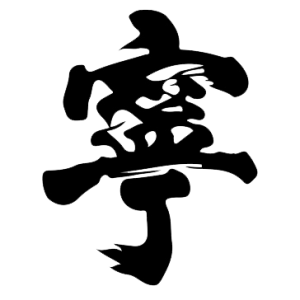

- Optical Character Recognition (OCR): Recognizing and identifying text in documents; a scanner does this.

- Vision Biometrics: Recognizing people, such as missing persons, through their iris patterns.

- Object Recognition: Great for retail and fashion, to find products in real time based on an image or scan.

- Special Effects: Motion capture and shape capture, any movie with CGI.

- Sports: When additional lines are drawn on the field during a game, yup, that’s computer vision.

- Social Media: Any story feature that lets you wear something on your face.

- Medical Imaging: 3D imaging and image guided surgery.

Kick-off for Camera Stream (which is basically what we’re going to work with in Module I)

In a Camera Stream, videos can be processed as a stream of individual frames.

Each frame is an image, or a 2D array of pixels. The pixel data is in RGBA (A representing alpha, or transparency), and each of the four channels is one byte (a number from 0 to 255).
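To make the pixel layout a bit more concrete, here is a minimal Python sketch of one frame. It assumes the frame is stored as one flat, row-major buffer with 4 bytes per pixel (the layout browser canvas APIs commonly expose); that layout and the helper names `make_frame`, `pixel_at`, `set_pixel` and `mean_brightness` are my own assumptions for illustration, not part of the course material.

```python
from typing import Tuple

RGBA = Tuple[int, int, int, int]
CHANNELS = 4  # R, G, B, A: one byte (0-255) each


def make_frame(width: int, height: int, rgba: RGBA) -> bytearray:
    """Build a flat RGBA buffer where every pixel has the same colour."""
    return bytearray(rgba) * (width * height)


def pixel_at(frame: bytearray, width: int, x: int, y: int) -> RGBA:
    """Read the pixel at column x, row y (assumes row-major order)."""
    i = (y * width + x) * CHANNELS
    r, g, b, a = frame[i:i + CHANNELS]
    return (r, g, b, a)


def set_pixel(frame: bytearray, width: int, x: int, y: int, rgba: RGBA) -> None:
    """Overwrite the pixel at column x, row y."""
    i = (y * width + x) * CHANNELS
    frame[i:i + CHANNELS] = bytes(rgba)


def mean_brightness(frame: bytearray) -> float:
    """Average of the R, G and B bytes over all pixels (alpha is ignored)."""
    total = 0
    for i in range(0, len(frame), CHANNELS):
        total += frame[i] + frame[i + 1] + frame[i + 2]
    return total / (3 * (len(frame) // CHANNELS))


# A tiny 4x3 opaque black "frame" with one orange pixel at (2, 1).
frame = make_frame(4, 3, (0, 0, 0, 255))
set_pixel(frame, 4, 2, 1, (255, 128, 0, 255))
print(pixel_at(frame, 4, 2, 1))
print(mean_brightness(frame))
```

A video stream would then just be a sequence of such buffers, one per frame, so anything computed for a single frame (like the brightness above) can be recomputed for each new frame as it arrives.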

So! For this upcoming module we are to play with the camera stream, tinker with the code provided in the form of tutorials, and create something fun & cool!

My partner for this Module is Denisa.

This module sounds simple but could be extremely challenging as well. First of all, the given instructions are extremely abstract, as we must avoid setting targets and goals. That means there isn’t a specific “goal” we’re aiming towards; we’re just supposed to play around and learn. We shouldn’t establish any concepts or scenarios (a mistake we made anyway, as you’ll see in the next entry). Through tinkering and trial and error, we reach a destination.

I will be taking photos, screen recordings, and such to document my process of tinkering.